Cooperative Systems of Teams of Robots and Humans

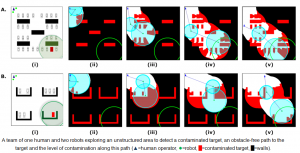

The following scenario illustrates the significance of a Cooperative System of Teams of Robots and Humans. A team of one human (with wearable equipment) and two robots is called to detect a contaminated target (the red box in the bottom-right corner of snapshots (i)), which is known to be located within the green circle (bottom right) in a parking lot. The two robots have different visibility abilities (shown as cyan circles centred on the two robots), and cars are parked within the lot at unknown locations. The mission the human/robot team is called to accomplish comprises the following tasks:

- T1. Have one of the robots approach the contaminated target and detect its location.

- T2. Create an accurate visual map of an obstacle-free path from the parking lot’s entrance to the contaminated target’s location and make sure that the mapped area is “as large as possible” (the white areas in the following figure correspond to the ones accurately mapped by the two robots).

- T3. Identify the contamination levels along the obstacle-free path so as to decide which are the safe areas for the human to enter. As in task T2, make sure that the contamination maps cover an area around the obstacle-free path that is “as large as possible”.

- T4. Make sure that each team member has line-of-sight with at least one other member and that the members move to locations that maximize the total Signal-to-Noise Ratio (SNR), so as to optimize the reliability of communication.

- T5. Both robots should detect and avoid colliding with the parked cars (whose locations are not known) or the walls (whose locations are assumed known).

- autonomously decides what each of the robots should do (by automatically exploiting the different abilities and skills of the two robots)

- controls the robots fully autonomously to accomplish these high-level actions.

Moreover, while the system autonomously assigns and navigates the two robots to accomplish tasks T1-T3, it makes sure that they are coordinated so that communication remains reliable and accurate (task T4) while they detect and avoid colliding with unknown obstacles (task T5).

It has to be emphasized that no tedious and/or ad-hoc design was required in order to get the solutions shown in Figure 1. All the system needed was to

- map each of the tasks T1-T5 to an appropriate objective criterion and

- provide some parameters describing the abilities and limitations of the human and the robots (maximum speed, visibility properties, sensor and communication noise, etc.).

To better understand how the system of Figure 1 operates, note the particular objective criteria employed, which are shown below. All the system has to do is control the robots and guide the human operator so that the sum of these criteria (i.e., a combined criterion equal to J1+J2+J3+J4+J5) is minimized.

| Task | Objective criterion |
| --- | --- |
| (T1) One of the robots approaching the contaminated target | J1 (equation image missing) |
| (T2) Create an accurate visual map of an obstacle-free path and maximize the mapped area | J2 (equation image missing) |
| (T3) Identify the contamination levels along the obstacle-free path and maximize the area of the contamination map | J3 (equation image missing) |
| (T4) Line-of-sight with at least one other member and optimize SNR | J4 |
| (T5) Obstacle avoidance | J5: if the distance between a robot and an obstacle is less than a safety distance, then (equation image missing), where (definition missing) |
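As a rough illustration of how the per-task criteria combine into the single quantity J1+J2+J3+J4+J5, the sketch below sums a set of criterion functions evaluated at the current robot positions. The names `combined_criterion` and `make_target_distance`, the distance-based stand-in for J1, the constant stand-in for J5, and all numeric values are assumptions made for this example; they are not the criteria used in the experiment.

```python
import math
from typing import Callable, Dict, Sequence, Tuple

Position = Tuple[float, float]
Criterion = Callable[[Sequence[Position]], float]


def combined_criterion(criteria: Dict[str, Criterion],
                       positions: Sequence[Position]) -> float:
    """Sum every per-task criterion at the current robot positions."""
    return sum(criterion(positions) for criterion in criteria.values())


def make_target_distance(target: Position) -> Criterion:
    """Hypothetical stand-in for J1: distance from the closest robot to the target."""
    def j1(positions: Sequence[Position]) -> float:
        return min(math.dist(p, target) for p in positions)
    return j1


# Illustrative values only; they are not taken from the experiment.
criteria = {
    "J1": make_target_distance((3.0, 0.0)),
    "J5": lambda positions: 2.0,  # placeholder constant penalty
}
robots = [(0.0, 0.0), (3.0, 4.0)]
total = combined_criterion(criteria, robots)
```

Because each criterion is just a callable, a task can be added or removed by changing the dictionary, without touching the code that evaluates the sum.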

There are three important remarks regarding the above criteria and their correspondence to high-level actions (tasks):

- The first remark is that none of these criteria depends on the particular application (mission) the robots have to accomplish. For instance, in any other mission where one or more of the tasks T1-T5 has to be accomplished, the corresponding objective criterion will remain the same, even if the number/type of robots is different from that of the scenario of Figure 1.

- The objective criteria J1-J5 cannot be expressed in analytical form, as they depend on the characteristics of the unstructured environment (e.g., locations of the parked cars, location of the target, etc.) as well as on the characteristics (capabilities and limitations) of the robots and humans. Nevertheless, the particular value of each criterion at each time instant of the experiment can be calculated using sensor measurements or signals internal to the system modules.

- Last but not least, it has to be stressed that any methodology that manages to (simultaneously) optimize the objective criteria J1-J5 will have to optimally exploit the different skills and abilities of the members of the human/robot team (i.e., assign each team member “to do what they can best do”).

Having the above scenario in mind, let us see how the overall system operates. At the heart of this system lies the Control of Heterogeneous Agents (ContrHA) module. The purpose of this module is, on receiving high-level actions from the humans (e.g., tasks T1-T3), to fully autonomously “spread” and navigate the robots to different areas and tasks so that:

- the operators’ instructed high-level actions are accomplished by

- making sure that each of the robots is assigned to “do what it can best do”

- and, moreover, making sure that the robots do not violate physical constraints (e.g., obstacle avoidance) while their motion optimizes the communication and localization accuracy of the overall system.

The key idea behind the development of such a module is based on CAO. The CAO algorithm requires no analytical knowledge of, or other complicated calculations on, the particular optimization criterion or the environment dynamics: all CAO needs to know is whether or not the current configuration of the robot team has improved the value of the optimization criterion associated with the objective the robots have been deployed for.
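The property described above, that only the measured improvement (or not) of the criterion is needed, can be illustrated with a deliberately simplified stand-in. The sketch below is not the CAO algorithm; it is a plain random-perturbation search that keeps a candidate configuration only when the measured cost decreases. The function name `improve_only_search`, its parameters, and the toy cost function are assumptions made for this example.

```python
import random
from typing import Callable, List, Sequence, Tuple

Position = Tuple[float, float]


def improve_only_search(cost: Callable[[Sequence[Position]], float],
                        start: Sequence[Position],
                        steps: int = 200,
                        step_size: float = 0.5,
                        seed: int = 0) -> Tuple[List[Position], float]:
    """Random-perturbation search that uses only 'did the cost improve?'.

    `cost` is treated as a black box (e.g. a value computed from sensor
    measurements); no gradient or analytical form is required.
    """
    rng = random.Random(seed)
    current = list(start)
    current_cost = cost(current)
    for _ in range(steps):
        # Propose a small random move for every robot.
        candidate = [(x + rng.uniform(-step_size, step_size),
                      y + rng.uniform(-step_size, step_size))
                     for x, y in current]
        candidate_cost = cost(candidate)
        # Keep the new configuration only if it improved the criterion.
        if candidate_cost < current_cost:
            current, current_cost = candidate, candidate_cost
    return current, current_cost


def toy_cost(positions: Sequence[Position]) -> float:
    """Hypothetical criterion: squared distance of each robot to (1, 1)."""
    return sum((x - 1.0) ** 2 + (y - 1.0) ** 2 for x, y in positions)


start = [(0.0, 0.0), (4.0, 3.0)]
final_positions, final_cost = improve_only_search(toy_cost, start)
```

The loop never inspects the internals of `toy_cost`, so the same code would run unchanged with a criterion whose value comes from measurements rather than from a formula.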

Within the system, we aim to take CAO one step further by extending it so that it can not only autonomously navigate teams of robots performing a single task (e.g., optimal surveillance coverage or exploration) but also handle complex SA missions involving cooperating teams of humans and robots. It does so by autonomously and dynamically assigning the robots to different sub-tasks (e.g., having some robots perform one sub-task while others perform other sub-tasks) and by making sure that each robot is assigned to “do what it can best do”. The Human-Robot-Interaction (HRI) module will also be used for this purpose. This module operates as follows: using HRI methodologies and tools, the human operators will be able to instruct the robot team by providing the high-level actions the team has to take. The HRI module will then transform these high-level actions into appropriate optimization criteria for the robot team to optimize. The information received from the system’s sensors and cognition modules will then be used to evaluate these optimization criteria in real time, in order

- for the ContrHA module to autonomously assign and control the robots

- for the human operators to monitor the success of the robot team in accomplishing the instructed objectives and, if and whenever needed, to modify the instructed high-level actions or instruct new ones.

Additionally, the HRI module supplements such baseline uni-directional control methods with proactive action selection methods, by researching affordance-based visualizations (essentially providing the user with the options that the robot team predicts it is capable of achieving), and human-intention prediction methods.

The communication criterion J4, associated with task T4, reads:

$$J_4 = \sum_{i=1}^{N_A} \min_{j:\, i \text{ has LOS with } j} \left\{ \left\| \mathrm{SNR}\left(x_i^A, x_j^A\right) \right\| \right\}$$
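A minimal sketch of how the J4 expression above could be evaluated numerically is given below. The `snr` and `has_los` callables are hypothetical placeholders supplied by the caller (in practice they would come from measurements), and two choices are assumptions of this example rather than part of the formula: an agent is not paired with itself, and an agent with no line-of-sight partner contributes nothing to the sum.

```python
import math
from typing import Callable, Sequence, Tuple

Position = Tuple[float, float]


def j4(positions: Sequence[Position],
       snr: Callable[[Position, Position], float],
       has_los: Callable[[Position, Position], bool]) -> float:
    """Sum over agents of the smallest |SNR| among their line-of-sight links."""
    total = 0.0
    for i, xi in enumerate(positions):
        links = [abs(snr(xi, xj))
                 for j, xj in enumerate(positions)
                 if j != i and has_los(xi, xj)]  # assumption: skip self-pairs
        if links:  # assumption: agents without any LOS partner add nothing
            total += min(links)
    return total


def toy_snr(a: Position, b: Position) -> float:
    """Hypothetical SNR model that decays with distance."""
    return 1.0 / (1.0 + math.dist(a, b))


def always_visible(a: Position, b: Position) -> bool:
    """Hypothetical LOS test for an obstacle-free area."""
    return True


agents = [(0.0, 0.0), (1.0, 0.0), (3.0, 0.0)]
j4_value = j4(agents, toy_snr, always_visible)
```

Passing the SNR model and the line-of-sight test as arguments keeps the evaluation independent of how those quantities are obtained.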